Aim & Scope

We encourage submissions that cover a broad range of visual understanding and generation tasks, including but not limited to image classification, object detection, instance/semantic/panoptic segmentation, keypoint/pose estimation, action recognition, visual grounding and VQA, tracking, retrieval, captioning, image/video generation and editing, 3D perception and reconstruction, and embodied/robotic perception.

Potential Topics of Interest

- Parameter-efficient adaptation: methods for adapters/LoRA, visual prompt tuning, soft/hard prompts, hypernetworks, and rank/structure selection that enable rapid specialization under tight compute and memory budgets.

- Instruction & preference tuning for vision/VLMs: techniques for building and using instruction datasets, pairwise/listwise preference learning (e.g., RL-free or RL-based objectives), and aligning models to human or task-specific criteria for recognition, localization, captioning, and generation.

- Inference-time & test-time adaptation: strategies that adapt on the fly, including entropy/objective-driven updates, batch-norm or feature alignment, online/episodic adaptation, retrieval-augmented inference, self-consistency, verifier/rescorer pipelines, and dynamic prompt optimization.

- Multi-modality, 3D, and temporal modeling: post-training across image–text–audio, video and action understanding, point clouds and meshes, 3D reconstruction, and cross-modal grounding.

- Small vision-language models: work on small vision-language models for typical vision tasks is also welcome.

Important Dates

Submission Guidelines

Submitted papers should present original, unpublished work, relevant to one of the topics of the Special Issue.

All submitted papers will be evaluated on the basis of relevance, significance of contribution, technical quality, scholarship, and quality of presentation, by at least two independent reviewers. It is the policy of the journal that no submission, or substantially overlapping submission, be published or be under review at another journal or conference at any time during the review process.

Manuscripts must conform to the author guidelines available on the IJCV website at: IJCV Submission Guidelines.

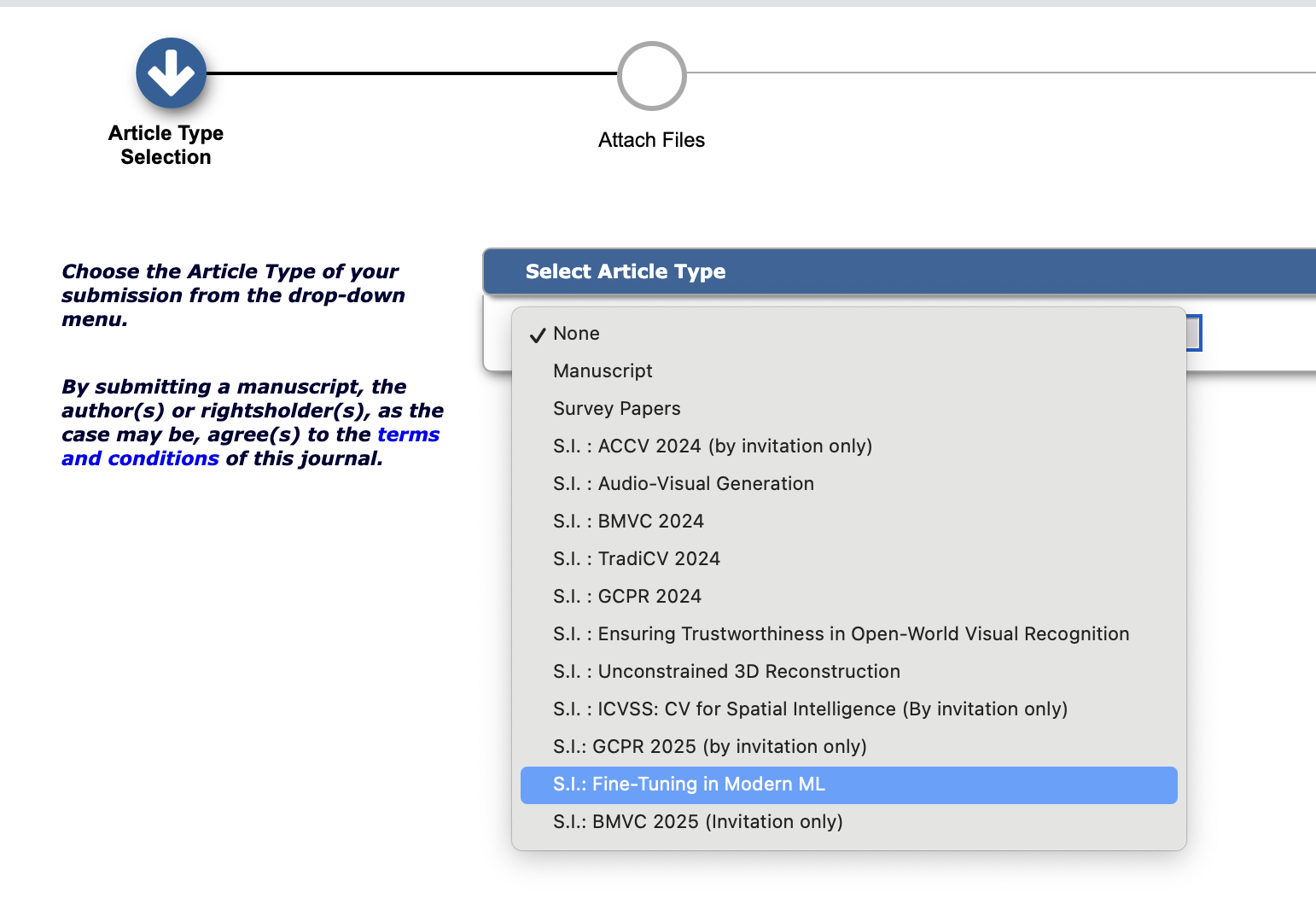

Please choose “S.I. : Fine-Tuning in Modern Machine Learning” from the Article Type dropdown.

Author Resources

Authors are encouraged to submit high-quality, original work that has neither appeared in, nor is under consideration by, other journals. Springer provides a host of information about publishing in a Springer Journal on our Journal Author Resources page, including FAQs, Tutorials along with Help and Support.

Other useful links for authors:

Ethical Responsibilities of Authors

This journal is committed to upholding the integrity of the scientific record. As a member of the Committee on Publication Ethics (COPE) the journal will follow the COPE guidelines on how to deal with potential acts of misconduct.

Authors should refrain from misrepresenting research results which could damage the trust in the journal, the professionalism of scientific authorship, and ultimately the entire scientific endeavour. Maintaining integrity of the research and its presentation is helped by following the rules of good scientific practice, which include:

- The manuscript should not be submitted to more than one journal for simultaneous consideration.

- The submitted work should be original and should not have been published elsewhere in any form or language (partially or in full), unless the new work concerns an expansion of previous work. (Please provide transparency on the re-use of material to avoid concerns about text-recycling (‘self-plagiarism’).)

- A single study should not be split up into several parts to increase the quantity of submissions and submitted to various journals or to one journal over time (i.e. ‘salami-slicing/publishing’).

- Concurrent or secondary publication is sometimes justifiable, provided certain conditions are met. Examples include: translations or a manuscript that is intended for a different group of readers.

- Results should be presented clearly, honestly, and without fabrication, falsification or inappropriate data manipulation (including image based manipulation). Authors should adhere to discipline-specific rules for acquiring, selecting and processing data.

- No data, text, or theories by others should be presented as if they were the author’s own (‘plagiarism’). Proper acknowledgement of other works must be given (this includes material that is closely copied (near verbatim), summarized and/or paraphrased), quotation marks must be used for verbatim copying of material (to indicate words taken from another source), and permissions must be secured for material that is copyrighted.